Test-Driven Development: why it works and why it doesn’t

A few weeks ago I took part in a small hands-on workshop introducing test-driven development. The workshop consisted in a short presentation followed by a practical exercise: to implement a small function the TDD way.

There was a laptop and we (the participants) would take turns to advance one test-code-refactor cycle at a time. Some sort of pair programming was in place.

The ultimate goal was to implement a function which, given a number, returns the list of its prime factors:

0 –> [], 1 –> [], 2 –> [2], 3 –> [3], 4 –> [2, 2], 5 –> [5], 6 –> [2, 3], 7 –> [7], 8 –> [2, 2, 2], 9 –> [3, 3], …, 12 –> [2, 2, 3], …

Disclaimer

In this article I assume basic knowledge on TDD – especially the paradigm that “TDD is not same as test-first”.

The first glitch: choosing the test cases

It’s not hard to see that not all the test cases are created equal. The “fewer” prime factors a number has, the less information it reveals, hence the less useful for the TDD approach that number is. If we tried only prime numbers as test cases: 2, 3, 5, 7, 11, 13, … then our TDD-derived function would have the following glorious but utterly useless form (I write the code here in Java, we used C# in the workshop):

It’s not hard to see that not all the test cases are created equal. The “fewer” prime factors a number has, the less information it reveals, hence the less useful for the TDD approach that number is. If we tried only prime numbers as test cases: 2, 3, 5, 7, 11, 13, … then our TDD-derived function would have the following glorious but utterly useless form (I write the code here in Java, we used C# in the workshop):

List<Integer> factors(int n) {

final List<Integer> result = new LinkedList<>();

if (n > 1) {

result.add(n);

}

return result;

}

Obviously, the workshop stayed away from such “oddities” and conveniently chose a set of relevant test cases: 2, 3, 4, 6, 10 and 12 (if I recollect correctly). The question is: why choosing those convenient test cases?

The second glitch: the immanence of “intelligent design”

Since Darwin’s times, evolutionists believe that life on Earth is the result of evolutionary processes conditioned by natural selection. Creationists believe that life cannot be explained in full without some external injection of information into biological processes.

Since Darwin’s times, evolutionists believe that life on Earth is the result of evolutionary processes conditioned by natural selection. Creationists believe that life cannot be explained in full without some external injection of information into biological processes.

If we draw a parallel between software and biology, “older” software development is creationist in nature whereas “agile” is more evolutionary. I see TDD as some sort of a darwinian process:

- the software starts from very simple forms (primitive protozoa) …

- … it gets developed by changing the code base (mutations) …

- … to satisfy the tests (natural selection) …

- … with very small steps (rare positive mutations, bringing only a small evolutionary advantage) …

- … and if a change doesn’t pass the test, the code-base gets reverted and another idea is tried out (filter out useless or damaging mutations)

Our workshop worked along the evolutionary lines:

- 0 and 1 returned [] (protozoa)

- 2 and 3 returned a list containing the same number (first positive mutations, more complex animals)

- 4 returned … oops, that returned [2, 2] and the code looked like this:

final List<Integer> result = new ArrayList<>();

if (n > 1) {

if (n > 2) {

result.add(2);

n /= 2;

}

result.add(n);

}

return result;

}

The question is: why the division by 2? There was nothing in the test cases suggesting two, so the choice had a teleological quality which is arguably denied in TDD (it is test-driven development, i.e. “driven by tests”, after all).

The third glitch: when to stop

We went on in this manner with 4, 6, 10 and 12 until the software became something like this:

We went on in this manner with 4, 6, 10 and 12 until the software became something like this:

final List<Integer> result = new ArrayList<>();

for(int i=2; i<=n; ) {

if (n % i == 0) {

n /= i;

result.add(i);

} else {

++i;

}

}

return result;

}

At this point its was obvious that we need to stop because the function satisfies the requirement. Here two big questions arise:

- why stopping at 12? Why not trying numbers around 997 (which is the largest prime number smaller than 1000)?

- how do we know that the function really returns the list of prime numbers? Satisfying a limited set of test cases (albeit non-redundant) does not guarantee that the function satisfies the invariant in all cases

Obviously, I asked these questions and the answers were rather puzzling.

Why stopping at 12?

TDD stops when there is no reason to test further, the problem is fully defined through tests so satisfying the tests means satisfying the problem. Great!

The issue is, for this workshop (any many other similar cases, see below), limiting the test cases at “some limit” (twelve) is arbitrary since there are plenty of numbers beyond it – which may or may not invalidate the implementation. We simply do not reliably know when to stop.

Does this really implement the requirements?

This was a really good one. The answer I got was “if this doesn’t implement the problem, can you give a counter-example?”. Clever, but it doesn’t work that way because absence of failure is no proof of correctness.

Proving an algorithm is correct requires analysing it and simply by looking at the piece of code above, it’s not very obvious why List<Integer> factors(int) collects the prime factors into result and not some other numbers.

Yes, it does, and here’s the proof

It is clear that factors() collects factors (see the if (n % i == 0) statement) but how do we know that variable i contains a prime number when result.add(i) takes place? To see that, let’s do a small “reductio ad absurdum”.

Let’s assume that i is not prime when result.add(i) takes place: i = p*q where 2<=p<i and 2<=q<i. Because i divides n, it means that p divides n – but that is not possible because p<i hence all the divisions through p have been exhausted before the current iteration. The same logic applies to q which means that i is not decomposable into p and q, therefore i is prime, QED.

Is this really TDD?

Maybe, but validating the solution is far from being test-driven: it is a mathematical proof rooted in number theory and algorithm analysis.

In other words, while the tests may help us build the software, they definitely do not prove our software is correct – at least in the cases where the definition of what we need to build is a general invariant that cannot be reduced to a discrete set of test cases.

Why TDD does not work

TDD does not qualify as a general methodology for software development because it runs on some assumptions which aren’t always true:

- The requirements can be represented as a discrete, non-redundant set of tests. While true in many, many cases it is not true in the general case.

- Satisfying the tests amounts to satisfying the requirements. Again, there are requirements not representable as tests (NFRs are just one example).

I am sure there is a lot of empirical evidence to the contrary in the form “I’ve been using TDD for … years”. While practical experience is definitely valuable, it is also limited. Let’s take some extreme examples to illustrate this, and imagine that:

- the inventor of Quick Sort would have chosen TDD. I doubt we’d have Quick Sort today. We can take the same for hash maps, red-black trees and any other dense piece of software we know.

- we develop an automated pricer for vanilla equity options using TDD. I don’t think we would obtain the computational equivalent of the Black-Scholes formula - which won the Nobel Prize in Economics for its creators.

- we develop a device driver, a general library or a piece of system software using TDD. I believe the set of tests driving the development would be either extremely long and unwieldy or insufficient. Why? Because of the enormous environmental state space that the driver/library/system software has to deal with.

Citing empirical and/or personal evidence for any methodology does not make that methodology valid – but it offers a strong case for when the methodology might be a good choice.

If TDD does not work in the general case, when does it work?

Sweet Enterprise, Bah bah bah, Good times never seem so good *)

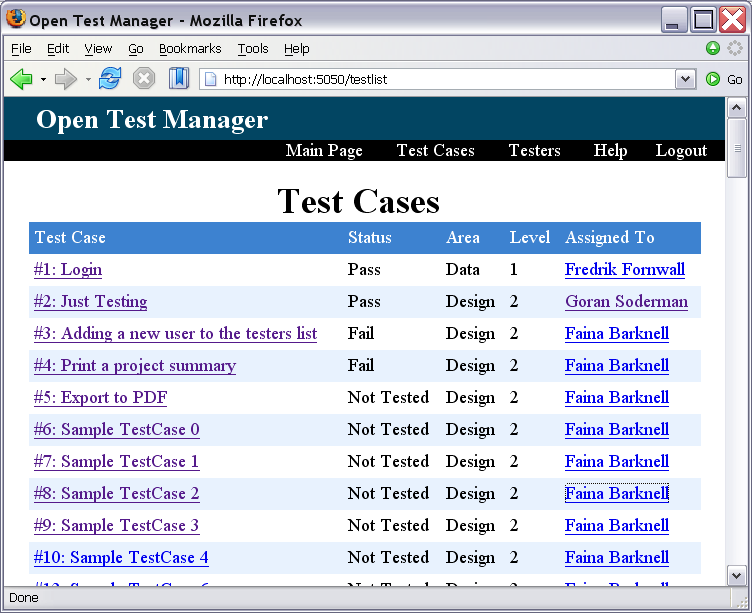

I think that there is one domain where TDD is definitely the way to go and that is enterprise software:

I think that there is one domain where TDD is definitely the way to go and that is enterprise software:

- Requirements can be represented as a discrete, non-redundant set of tests. Enterprise software is by its very nature business, bespoke software. Developers write software to satisfy business needs – which can be listed, analysed and encoded in form of tests that drive development.

- Satisfying the tests largely amounts to satisfying the requirements. The complexity of enterprise software does not reside in its algorithms but in the environment it has to function in – arcane, untidy, sometimes legacy, often buggy. TDD puts an order in the chaos.

Perhaps the strongest argument for using TDD in the enterprise is the abysmal state of quality control in enterprise software. Such state is justified by costs (“this is not Microsoft”) or by the small number of users of enterprise software (although banks, for example, may have tens of thousands of employees and millions of customers).

TDD is a good choice in enterprise development because no matter how soon and in what state the software will be rushed into production, those initial tests remain. Without TDD, the software will be pushed to production anyhow – often without an objective, reliable testimony to its quality.

Final Disclaimer

As I have never used TDD, I do not pretend to understand all aspects of it. To counteract that, I decided to undertake two endeavours:

- To develop a general-purpose Java library using strictly TDD. This library is supposed to implement a generalized form of structural pattern matching. Basically what Scala offers, but stronger, closer to what Prolog gives for free. This exercise is meant to test how suitable TDD is for general-purpose library development.

- To develop a Java EE application from ground-up using strictly TDD. I do not want to disclose the nature of this project now, but it will cover the major J2EE technologies. This exercise will put TDD to test on a less algorithmic but more customer-oriented project.

About the evolution of these two projects on TDD coordinates I will write in future posts.

*) It is a reference to the “Sweet Caroline” song by Neil Diamond. You can read its lyrics here.

Comments

Post a Comment